These are all the torrents currently managed and released by Anna’s Archive. For more information, see “Our projects” on the Datasets page. For Library Genesis and Sci-Hub torrents, the Libgen.li torrents page maintains an overview.

These torrents are not meant for downloading individual books. They are meant for long-term preservation.

Torrents with “aac” in the filename use the Anna’s Archive Containers format. Torrents that are crossed out have been superseded by newer torrents, for example because newer metadata has become available. Some torrents that have messages in their filename are “adopted torrents”, which is a perk of our top tier “Amazing Archivist” membership.

You can help out enormously by seeding torrents that are low on seeders. If everyone who reads this chips in, we can preserve these collections forever. This is the current breakdown:

| Status | Torrents | Size | Seeders |
|---|---|---|---|
| 🔴 | 54 | 154.0TB | <4 |
| 🟡 | 183 | 92.5TB | 4–10 |
| 🟢 | 111 | 17.2TB | >10 |
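The three status buckets follow fixed seeder thresholds, and the totals are simple sums. This short Python sketch reproduces both from the table. The function name `status` is only illustrative and is not something the site publishes.

```python
# Seeder thresholds taken from the table: red <4, yellow 4–10, green >10.
def status(seeders: int) -> str:
    """Map a torrent's seeder count to the table's status bucket."""
    if seeders < 4:
        return "🔴"
    if seeders <= 10:
        return "🟡"
    return "🟢"

# Rows copied from the table: (status, number of torrents, size in TB).
ROWS = [("🔴", 54, 154.0), ("🟡", 183, 92.5), ("🟢", 111, 17.2)]

total_torrents = sum(n for _, n, _ in ROWS)
total_tb = sum(tb for _, _, tb in ROWS)
red_share = ROWS[0][2] / total_tb  # fraction of all data sitting in the 🔴 bucket

print(total_torrents, round(total_tb, 1), f"{red_share:.1%}")  # 348 263.7 58.4%
```

The output shows that more than half of the roughly 263.7TB in the collection is held by torrents with fewer than four seeders.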

IMPORTANT: If you seed large amounts of our collection (50TB or more), please contact us at AnnaArchivist@proton.me so we can let you know when we deprecate any large torrents.

It seems the majority of the torrents with a poor seeder count are in the 1.5TB+ range. I simply don’t have the storage for that. Almost everything in the 0–300GB range is pretty well covered.

Agreed. I’d like to share a bit of disk storage but I only have 2 TB and I need that for my own consumption.

Give us smaller torrents (e.g. 50GB parts) instead.

You can easily select the files you would like to download and seed from a given torrent.

How do you make the torrent software automatically rotate which content it preserves, based on the files with the fewest copies in the swarm? I don’t want to manage this manually or have to select files by hand.

That’s an answer I don’t have. I would just focus on grabbing what has the fewest copies at the snapshot in time when I am downloading the files. Other archivers will grab what needs more copies at the time they download, just as you and I are basing our downloads on what has the fewest existing copies, weighed against what others have seeded before us.

It’s a churn of archivers “rotating” the content we each choose to preserve (almost entirely without regard for WHAT the specific content is).

If enough people were too active about deleting what they’ve downloaded that has “enough” copies and replacing it with content that doesn’t … okay, that’s unlikely, but we still need more seeders in general, and those of us who keep on seeding that which we’ve already downloaded make the decision of what needs more copies seeded in the future easier to make for new archivists and/or those who have invested in more capacity.

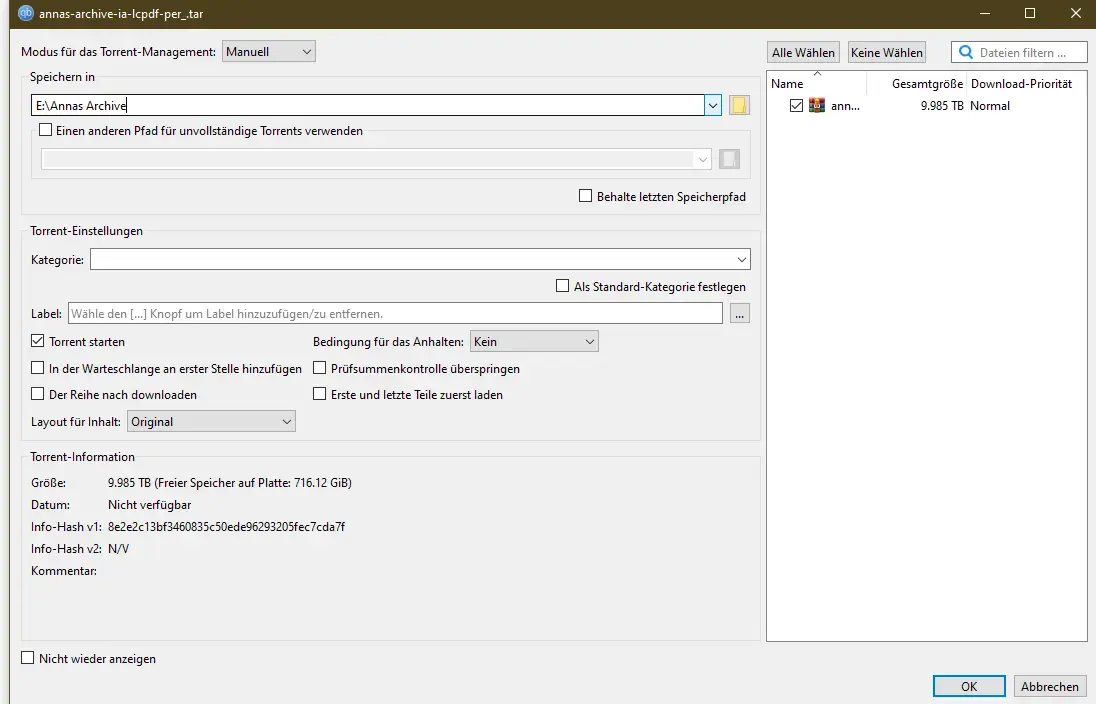

How do I do this? When I open up the magnet link, it only seems to give me one checkbox for the entire 10 TB thing. Sure, I can check “download first and last parts before anything else” but it would still try to download all 10 TB, no?

Edit: Never mind, I didn’t grab one of the files that has “aac” in its name.

Answered already here: https://lemmy.dbzer0.com/comment/5155010

Already well enough seeded.

Also: Partial downloads on torrents are ~~evil~~ not great ([reason for the change](https://lemmy.dbzer0.com/comment/5156407)). (Still) Don’t do that.

Edit: Updated my stance on partial downloads.

Why are partial downloads evil?

If you’re not a full seeder, you can only give what you have.

If another seeder quits, nobody can complete the torrent.

Not great.

Not great isn’t the same as evil. They’re still sharing what they have.

I updated my comment. I’d kindly ask for feedback, if possible.

Okay, but you’re providing more to the swarm than if you weren’t participating.

True. Can’t argue with that logic because I use it as well

This is a bad take, I want to auto seed the last 1% of every torrent which is the least available and automatically rotate it. Torrent infrastructure needs to stop sucking.

Me with only a 250GB SSD in an Intel NUC lmao

That’s my rented seedbox I sync my downloads from.

At home I have an 11th-gen Intel NUC with 8TB attached via USB.

There are smaller torrents (<80GB); go take a look at them on their website.

Those have enough seeds for now.

Can you partially seed some of a larger torrent?

Yes. When downloading the torrent, go to the file list for that torrent and uncheck boxes. Resist the urge to keep only the first files in the list (most people do this, leaving few if any seeding the rest); instead, try to grab files from the middle or end, or just be random about it.

When the torrent finishes downloading the files you’ve selected, it will automatically seed those portions of the torrent which you have downloaded.

EDIT: I just remembered, some torrent programs will actually show you the seed ratio per file in the torrent. There are reasons hardly anyone is (sincerely) trying to reinvent this wheel.
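The “be random about it” advice can be sketched in a few lines of Python: shuffle the file list, then keep files until a storage budget is full. The file names, sizes and the `pick_random_files` helper are hypothetical; in practice the result would be applied by unchecking the other files in the client.

```python
import random

def pick_random_files(files, budget_bytes, seed=None):
    """Pick a random subset of (name, size) files whose total size fits in budget_bytes.

    Shuffling first avoids everyone ticking only the first files in the list.
    """
    rng = random.Random(seed)
    shuffled = list(files)
    rng.shuffle(shuffled)
    chosen, used = [], 0
    for name, size in shuffled:
        if used + size <= budget_bytes:
            chosen.append(name)
            used += size
    return chosen, used

GB = 10**9
# Hypothetical file list for one large torrent: 100 files of 40GB each.
files = [(f"part_{i:03d}", 40 * GB) for i in range(100)]
chosen, used = pick_random_files(files, budget_bytes=500 * GB, seed=42)
print(len(chosen), used // GB)  # 12 480
```

Because every seeder draws a different random subset, the copies spread across the whole torrent instead of piling up on its first files.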

This is true, but I don’t know if you’d be counted as a seeder on that list if you don’t have the full torrent.

I don’t think you would, but you would count towards the “availability” that’s listed, so ten leechers each holding a unique 10% of the torrent would give an availability of 1.0 for a new leecher who wanted to become a seed.
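Here is a simplified Python model of that “availability” number. It is loosely modeled on libtorrent’s “distributed copies” idea, but it is an assumption and not any client’s exact formula. The integer part is the number of copies of the rarest piece. The fractional part is the share of pieces that have more copies than that. It reproduces the ten-leechers example and also shows why everyone grabbing the same first 10% does not help.

```python
def availability(peer_bitfields, num_pieces):
    """Simplified swarm availability (an approximation, not a specific client's code).

    Integer part: number of copies of the rarest piece among connected peers.
    Fractional part: share of pieces that have more copies than that minimum.
    """
    counts = [0] * num_pieces
    for have in peer_bitfields:  # each peer is a set of piece indices it holds
        for piece in have:
            counts[piece] += 1
    rarest = min(counts)
    above = sum(1 for c in counts if c > rarest)
    return rarest + above / num_pieces

NUM_PIECES = 100
# Ten leechers, each holding a different 10% of the torrent.
disjoint = [set(range(i * 10, (i + 1) * 10)) for i in range(10)]
print(availability(disjoint, NUM_PIECES))    # 1.0
# Ten leechers who all kept only the first 10%.
same_start = [set(range(10)) for _ in range(10)]
print(availability(same_start, NUM_PIECES))  # 0.1
```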

This is too much work. Someone automate this with the smartest algorithm and I will preserve with the power of a 10× 2TB, 10GbE seedbox.

Automatically seed, on a rotation, the least-available 10% of each torrent’s files. The percentage should be adjustable globally and per torrent.
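No client feature for this is named in the thread, so the following Python is only a sketch of the requested policy. For each torrent, it picks the least-available N% of files, using a global default percentage that a per-torrent override can replace. The `FileInfo` type, the per-file `availability` values and the helper names are all hypothetical. A “rotation” would mean re-running the plan periodically as availability changes.

```python
from dataclasses import dataclass

@dataclass
class FileInfo:
    name: str
    availability: float  # hypothetical per-file availability reported by the client

def least_available(files, percent):
    """Names of the least-available `percent`% of files, rounded up, at least one."""
    k = max(1, (len(files) * percent + 99) // 100)  # integer ceiling, avoids float rounding
    ranked = sorted(files, key=lambda f: (f.availability, f.name))
    return [f.name for f in ranked[:k]]

def plan_rotation(torrents, global_percent=10, overrides=None):
    """Choose files to seed per torrent; a per-torrent override beats the global default."""
    overrides = overrides or {}
    return {
        name: least_available(files, overrides.get(name, global_percent))
        for name, files in torrents.items()
    }

torrents = {
    "big": [FileInfo(f"big{i:02d}", i * 0.5) for i in range(20)],
    "small": [FileInfo("s0", 3.0), FileInfo("s1", 1.0), FileInfo("s2", 2.0)],
}
plan = plan_rotation(torrents, global_percent=10, overrides={"small": 50})
print(plan)  # {'big': ['big00', 'big01'], 'small': ['s1', 's2']}
```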

Who thought it was a good idea to have single torrents of multiple TB??

Bruh, this is a terrible way to share this. Why not torrents of the raw material in predefined categories that won’t change? Like “1984 - Sci-Fi - English - A-N”, “1984 - Sci-Fi - English - O-Z”, “1990 - Biology”, “2012 - Physics”. Then people would actually download this to use it themselves, instead of some archive that has to be extracted and takes up several times the space again.

The hell am I going to do with a 300GB archive file that I cannot even look into? I might as well be storing an encrypted blob 300GB large or just reducing the size of my partition by 300GB.

It’s great that people want to preserve human knowledge, but there surely are better ways to do this.

If you can do better let’s see it. This post is for altruists and archivists… clearly you’re neither.

This is just bad communication, beating down on people that are delivering constructive criticism.

Way to gatekeep. Don’t you think it would be better if more people could contribute bandwidth and storage with what they have, instead of buying a new hard drive? Wouldn’t you want more redundancy, instead of less?

I don’t think they use indexable compression either, right? That essentially kills it for me.

The easiest way to host is not TB/PB-sized archives, but indices and slices of them.

It’s easier for a lot of us to download a few gigs and share that, rather than download TB/PB-sized archives.
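The “indices and slices” idea, combined with the earlier request for roughly 50GB parts, can be sketched as a greedy packing of files into consecutive shards with a name-to-shard index. This is a hypothetical illustration. It assumes the files are already sorted by some stable key, such as a category, so that shard boundaries stay predictable.

```python
def shard(files, max_bytes):
    """Group (name, size) files, in order, into consecutive shards of at most max_bytes.

    A single file larger than max_bytes gets a shard of its own.
    Returns a list of shards; each shard is a list of file names.
    """
    shards, current, used = [], [], 0
    for name, size in files:
        if current and used + size > max_bytes:
            shards.append(current)
            current, used = [], 0
        current.append(name)
        used += size
    if current:
        shards.append(current)
    return shards

def build_index(shards):
    """Map each file name to the number of the shard that contains it."""
    return {name: i for i, names in enumerate(shards) for name in names}

GB = 10**9
files = [("a", 30 * GB), ("b", 30 * GB), ("c", 20 * GB), ("d", 60 * GB), ("e", 10 * GB)]
shards = shard(files, max_bytes=50 * GB)
index = build_index(shards)
print(shards)      # [['a'], ['b', 'c'], ['d'], ['e']]
print(index["c"])  # 1
```

With a small index like this, someone who only wants one file, or who only has room for one slice, can find the right shard without touching the rest.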

WTF he’s raising a good point

It’s unclear to me how these torrents are used. If individual books are not downloaded from them, is this only to make it possible to create similar sites in the future, in case this one is taken down?

They are meant for long-term preservation.

This is basically a “distributed backup” of the entire database. The torrents are not actively serving files; they’re there to store multiple copies of the main database across the globe so that the entire database can be recovered (by anyone with the requisite knowledge, mind you) in the event that something happens to the original Anna’s Archive team or the main database is lost/seized by “law enforcement”.

It’s equivalent to how backup managers in ye olden days would make broken up piece files of a certain size that could fit onto a CD or DVD, so you could fit the entire contents of a large 20+GB hard drive onto multiple smaller media. The backup itself is not accessed unless your main hard drive crashes, in which case you reassemble all the individual pieces back into your complete OS environment after replacing the hard drive.
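The split-and-reassemble analogy maps onto how BitTorrent v1 itself works. Content is cut into fixed-size pieces, and each piece is verified against a SHA-1 hash. The Python below is a toy in-memory sketch of that idea and not a real client. It also shows the catch raised further down the thread: without every piece, the whole cannot be rebuilt.

```python
import hashlib

def split(data: bytes, piece_size: int):
    """Cut data into fixed-size pieces (the last one may be shorter) and hash each one."""
    pieces = [data[i:i + piece_size] for i in range(0, len(data), piece_size)]
    hashes = [hashlib.sha1(p).hexdigest() for p in pieces]
    return pieces, hashes

def reassemble(pieces, hashes):
    """Rejoin pieces in order, refusing if any piece is missing (None) or corrupted."""
    if len(pieces) != len(hashes):
        raise ValueError("piece count does not match hash count")
    for i, (piece, expected) in enumerate(zip(pieces, hashes)):
        if piece is None or hashlib.sha1(piece).hexdigest() != expected:
            raise ValueError(f"piece {i} missing or corrupted")
    return b"".join(pieces)

data = bytes(range(256)) * 10           # 2560 bytes standing in for a large backup
pieces, hashes = split(data, piece_size=1024)
print(len(pieces))                      # 3
print(reassemble(pieces, hashes) == data)  # True

damaged = list(pieces)
damaged[1] = None                       # one piece lost with its last seeder
try:
    reassemble(damaged, hashes)
except ValueError as err:
    print(err)                          # piece 1 missing or corrupted
```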

I have a spare 100 or so Terabytes and I can fit roughly another quarter petabyte in my server. I would like to help. I will look more into this potentially tomorrow when I have some free time. The preservation of knowledge is too important.

my man!!

I have a few questions as you appear to be part of the archive or at least very familiar with it.

Roughly how often are the archives updated?

Do you guys already have a proper backup method or are your seeds acting as that backup?

Any idea realistically how much bigger the archive can get data wise in the next few years? Estimates or educated guesses are fine. I want to know how much I need to plan in advance.

If I take the whole archive, must I deploy it or can it be searched through if I have the whole thing and I want something specific out of it?

Unfortunately I don’t have enough storage space to seed. Is there any other way to help?

The torrents are broken up into smaller pieces, so don’t be intimidated by the big TB numbers from the sum total. Otherwise, donations are always useful.

donate!

Torrents that are crossed out have been superseded by newer torrents, for example because newer metadata has become available.

Wait, fr? These aren’t even the final versions of the torrents? You might start seeding multiple terabytes of data and someone goes “lol nm here’s _final_final_3.aac” Absolutely bonkers way to do this. Put hard drives in an arctic vault or something, it would make more sense than this.

I’d happily seed a TB or so of the most endangered torrents. My internet ain’t much, but my old PC is almost always on.

How do I know which of the piece torrents are high or low on seeders? Maybe I’m just being special or can’t see it on mobile, but is there no way to check each torrent’s health without actually downloading every piece and putting it in my torrent client?

The health is listed next to each piece at this URL: https://annas-archive.org/torrents

Ah perfect. I’ll throw some of the 300gb archives on my rig when I get home.

These torrents are not meant for downloading individual books. They are meant for long-term preservation.

What does this mean? If I download one of those torrents, it won’t have usable PDFs/EPUBs? Just random encoded garbage in some obscure format? So much for preservation. When their website is taken down and they’re in jail, nobody will be able to use the torrents, so why seed them anyway?

The torrents are there to preserve the archive as a whole, not individual books or documents. The entire archive is ~263TB, which is far more storage than most people have at home. So instead they broke it up into more palatable pieces that, when combined, make the whole again. Like a huge multi-part .rar from back in the day.

I hope you are wrong. A big multi-part archive normally can’t be extracted if any part is missing. I hope they make separate zips of a smaller size, like 100GB chunks of random books. Judging by one person’s comment, it seems the largest compilations are very unpopular.

IME with libgen torrents, the filenames will be a random number string generated by their database, and the extensions might be removed or garbled, but generally these files are actual eBooks/articles/whatever.
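When the names are database numbers and the extensions are missing, the format can usually be recovered from the first bytes of each file. This Python sketch checks a few well-known signatures. PDF starts with `%PDF-`, DjVu with `AT&TFORM`, and MOBI has `BOOKMOBI` at byte offset 60. EPUB is a ZIP container whose `mimetype` entry reads `application/epub+zip`. The `sniff_ebook` helper is hypothetical and covers only these cases.

```python
import tempfile
import zipfile
from pathlib import Path

def sniff_ebook(path):
    """Guess an e-book format from a file's leading bytes, ignoring its name."""
    path = Path(path)
    with path.open("rb") as f:
        head = f.read(68)
    if head.startswith(b"%PDF-"):
        return "pdf"
    if head.startswith(b"AT&TFORM"):
        return "djvu"
    if head[60:68] == b"BOOKMOBI":  # Palm database type/creator fields
        return "mobi"
    if head.startswith(b"PK\x03\x04"):
        # EPUB is a ZIP container whose "mimetype" entry reads application/epub+zip.
        try:
            with zipfile.ZipFile(path) as z:
                if z.read("mimetype").strip() == b"application/epub+zip":
                    return "epub"
        except (KeyError, zipfile.BadZipFile):
            pass
        return "zip"
    return "unknown"

# Usage: two files with database-style numeric names and no extensions.
tmp = Path(tempfile.mkdtemp())
(tmp / "1234567").write_bytes(b"%PDF-1.7\n%...")
with zipfile.ZipFile(tmp / "7654321", "w") as z:
    z.writestr("mimetype", "application/epub+zip")
    z.writestr("OEBPS/content.opf", "<package/>")
print(sniff_ebook(tmp / "1234567"), sniff_ebook(tmp / "7654321"))  # pdf epub
```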

You’re reciting their bullshit. I don’t care about the size, what I care is that there’s no documentation on the format they’re using and nobody speaking about how to use those torrents.

Those guys achieved a lot with their website, but that format is total bullshit. If they truly cared about preservation, they wouldn’t be sharing obscure formats; instead, they would just provide a simple sharded backup of the metadata and files, meaning that anyone could pick one part and its contents would be the actual PDF/ePub files without any special encoding. This approach would’ve been better in multiple ways:

- If most parts are lost, the remaining ones would still be available and usable;

- If the guys vanish from the face of the planet, anyone could easily pick up the available parts and build a new library from them;

- Simple sharded backup means that people could use those torrents to download books as well: just add the torrent and pick the specific file to download. This would make the torrents way more likely to be shared and seeded, as most people could afford to keep parts or specific books they want rather than an entire 300GB torrent. For what it’s worth, individualism and selfishness always win in society; giving people a usable sharded backup would make it more likely to last.

What I see is that the guys running the website are just asking people to share HDD space to keep their database intact in case of legal trouble, so they can rebuild, but they chose to do it in a way that makes it really hard for others to replicate what they’ve built from their files, effectively creating a monopoly on their stuff. Too bad this behavior just fucks up the community if they go away and can’t or don’t want to come back.

Edit: let me go even further on this. If they were actually interested in preservation, they would just provide all their files as individual torrents/magnets that people could use both for downloading and for seeding long term. Then they could provide a file with a list of all magnets, or a zip of all torrent files, so that seeders could preserve large chunks of the database with minimal effort: import all torrents in a folder and done.
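A “list of all magnets” like the one proposed here is just one BitTorrent v1 magnet URI per line, in the form `magnet:?xt=urn:btih:<40-hex info-hash>&dn=<display name>`. The Python below builds such a list. The info-hash and name are made-up placeholders. Whether a particular client can bulk-import a plain-text list is not assumed here.

```python
from urllib.parse import quote

HEX = set("0123456789abcdefABCDEF")

def magnet(info_hash: str, name: str) -> str:
    """Build a BitTorrent v1 magnet link from a 40-character hex info-hash and a display name."""
    if len(info_hash) != 40 or not set(info_hash) <= HEX:
        raise ValueError("expected a 40-character hex info-hash")
    return f"magnet:?xt=urn:btih:{info_hash.lower()}&dn={quote(name)}"

def magnet_list(entries):
    """One magnet link per line for a list of (info_hash, name) pairs."""
    return "".join(magnet(h, n) + "\n" for h, n in entries)

# Placeholder info-hash and name, for illustration only.
entries = [("0123456789abcdef0123456789abcdef01234567", "1984 - Sci-Fi - English - A-N")]
print(magnet_list(entries), end="")
# magnet:?xt=urn:btih:0123456789abcdef0123456789abcdef01234567&dn=1984%20-%20Sci-Fi%20-%20English%20-%20A-N
```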

Of course it will… but downloading 150TB is overkill if you want one book.

As if in 2023 you couldn’t add a torrent and pick individual files to download from it instead of the entire thing.

Perhaps reseeding on I2P is also a good option

RIP whoever ends up getting a copy without knowing of the TBs needed

Yeah, then it can’t be taken down

I’ll set this up this weekend, thanks for the heads up (salute emoji)

🫡

Just got a 300gb torrent to seed for a while. It won’t be on 24/7 but most of the day it will.

Is a seedbox necessary or will qbittorrent with a VPN work?

If you don’t mind leaving your PC on and using the bandwidth, I don’t see why not.

Even that doesn’t matter; why would you need to keep your PC on? It’s about preserving the data, and if it’s only online occasionally, that’s still better than nothing.

that works!


A seedbox is a server instance you rent out specifically for torrenting.


Functionally your set up is doing the same thing as a seedbox. They are generally thought of as remote and usually have a very good Internet connection. I think people tend to share seedboxes as well.

I guess you could build a computer for the purpose with a very high speed connection and a VPN to match. But I assume people are usually referring to the ones you pay monthly for. They usually make managing private tracker access easier because you have a 24/7 machine with lots of storage and a high speed connection running constantly for keeping your ratio up.

I’d gladly donate a few TB, but I’m not about to fill my entire array for books I’ll never read…