AI’s really just getting started, isn’t it?

This is the best summary I could come up with:

On Monday, Nvidia unveiled the Blackwell B200 tensor core chip, the company’s most powerful single-chip GPU, with 208 billion transistors. Nvidia claims it can cut AI inference operating costs (such as those of running ChatGPT) and energy consumption by up to 25 times compared to the H100.

The news came as part of Nvidia’s annual GTC conference, which is taking place this week at the San Jose Convention Center.

Several major organizations, such as Amazon Web Services, Dell Technologies, Google, Meta, Microsoft, OpenAI, Oracle, Tesla, and xAI, are expected to adopt the Blackwell platform, and Nvidia’s press release is replete with canned quotes from tech CEOs (key Nvidia customers) like Mark Zuckerberg and Sam Altman praising the platform.

Nvidia’s data center focus has made the company wildly rich and valuable, and these new chips continue the trend.

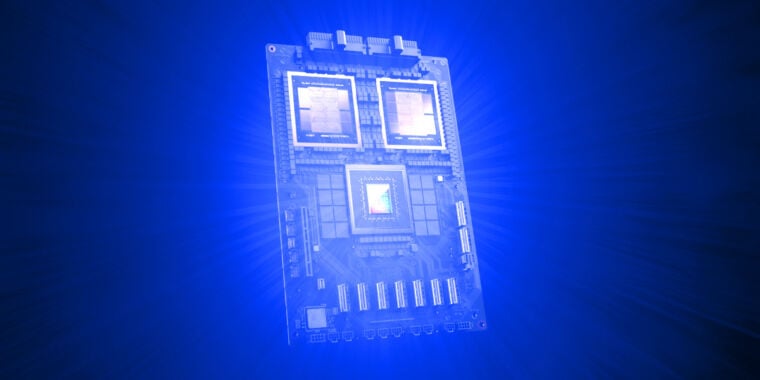

The Grace Blackwell GB200 chip, which pairs two B200 GPUs with one Grace CPU, arrives as a key part of the new NVIDIA GB200 NVL72, a multi-node, liquid-cooled data center computer system designed specifically for AI training and inference tasks.

It combines 36 GB200s (that’s 72 B200 GPUs and 36 Grace CPUs total), interconnected by fifth-generation NVLink, which links chips together to multiply performance.
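The totals quoted above follow from simple multiplication. Here is a minimal sketch of that arithmetic, assuming each GB200 carries two B200 GPUs and one Grace CPU (the ratio implied by the 36/72/36 figures); the function and constant names are made up for illustration and are not from Nvidia:

```python
# Assumed per-GB200 composition, inferred from the summary's totals
# (36 GB200s -> 72 B200 GPUs and 36 Grace CPUs).
GPUS_PER_GB200 = 2
CPUS_PER_GB200 = 1


def nvl72_totals(num_gb200: int = 36) -> dict:
    """Return the GPU and CPU counts for a rack with `num_gb200` GB200 chips."""
    return {
        "b200_gpus": num_gb200 * GPUS_PER_GB200,
        "grace_cpus": num_gb200 * CPUS_PER_GB200,
    }


if __name__ == "__main__":
    print(nvl72_totals())
```

With the default of 36 GB200s, this gives the 72 GPUs that the "NVL72" name refers to.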

The original article contains 620 words, the summary contains 189 words. Saved 70%. I’m a bot and I’m open source!