agilob

- 81 Posts

- 54 Comments

51·4 months ago

I’m not sure you understand what swap actually is, because even machines with 1 TB of RAM have swap partitions. Just in case, read this post from a developer working on swap in Linux: https://chrisdown.name/2018/01/02/in-defence-of-swap.html

11·5 months ago

The Linux kernel uses the CPU default scheduler, CFS,

Linux 6.6 (which recently landed in Debian) changed the scheduler to EEVDF, which is pretty widely criticized for poor tuning. The CPU is 100% busy, which means the scheduler is doing a good job. If the CPU were idle and compilation were slow, then we would look into task scheduling and the scheduling of blocking operations.

21·5 months ago

EDIT: Tried nice -n +19, still lags my other programs.

Yeah, this is the wrong way of doing things. You should get better results with CPU pinning. Deprioritizing threads that constantly interact with disk I/O, memory caches and display I/O is the wrong end of the stick. The build still needs to display compilation progress and warnings, and to access I/O.
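
A minimal sketch of CPU pinning, assuming a Linux machine and Python 3. `os.sched_setaffinity` is a real, Linux-only call; the helper names `pick_build_cpus` and `run_pinned`, the choice to reserve one core, and the `make -j3` command in the usage comment are illustrative assumptions, not something taken from this thread.

```python
import os
import subprocess


def pick_build_cpus(available, reserve=1):
    """Return the CPUs to give to the build, leaving `reserve` CPUs free.

    `available` is any iterable of CPU ids, for example the result of
    os.sched_getaffinity(0). The lowest-numbered CPUs are the ones kept
    free for the rest of the system (an arbitrary choice for this sketch).
    """
    cpus = sorted(available)
    if len(cpus) <= reserve:
        # Too few cores to reserve any: let the build use all of them.
        return set(cpus)
    return set(cpus[reserve:])


def run_pinned(cmd, cpus):
    """Run `cmd` with its CPU affinity restricted to `cpus` (Linux only)."""

    def pin():
        # Runs in the child process just before exec; pid 0 means "myself".
        os.sched_setaffinity(0, cpus)

    return subprocess.run(cmd, preexec_fn=pin, check=False)


# Hypothetical usage: keep one core free for the desktop while compiling.
# build_cpus = pick_build_cpus(os.sched_getaffinity(0), reserve=1)
# run_pinned(["make", "-j3"], build_cpus)
```

The same effect is available from the shell with `taskset -c`. Whether it actually helps depends on what is saturated, which is why profiling comes first.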

There’s no way of knowing why your system is so slow without profiling it first. Taking any advice from here or elsewhere without telling us first what your machine is doing is missing the point. You need to find out what the problem is and report it at the source.

11·5 months ago

The CPU is already 100% busy, so changing the number of compilation jobs won’t help; the CPU can’t go faster than 100%.

Yeah this survey is super inappropriate and offensive. Please do not ask such personal questions.

Did you notice that more inappropriate questions appear and disappear based on your previous answers?

7015·7 months ago

Old issue, so why post it now and make it sound like MS demands something?

Opened 11 months ago Last modified 11 months ago

It’s a regression, so ffmpeg should fix a regression.

1·7 months ago

deleted by creator

Just not in Java…

I think you’re biased against Java. Amazon was started in C/C++ and Java J2EE, at a time when configuring a web server required writing around 300 lines of XML just to handle cookies, browser cache and a login page. Until recently BMW had their own JRE implementation. It’s no secret that SIM cards, including those in Tesla cars, run Java Card too; even government-issued SIM cards in the EU have to run Java Card, not C++. Everything was fine with Java until ECMAScript appeared and made people iterate on software versions faster. New programming languages and team organisation methodologies left some programming languages in the dark, but that included C# too. All of them are quickly catching up. If Java were so bad, it wouldn’t still be with us today; it would have gone the way of Perl.

There are two schools:

- the best stack is the one you know best

- the best stack is the one designed for the job

Remember that Google was written in Python and Java. Facebook in PHP. iOS in Objective-C. GitHub in Ruby on Rails.

25·8 months ago

After doing it for 15 years, I must be good at it and everything should be easy.

hidethepainharold.jpg

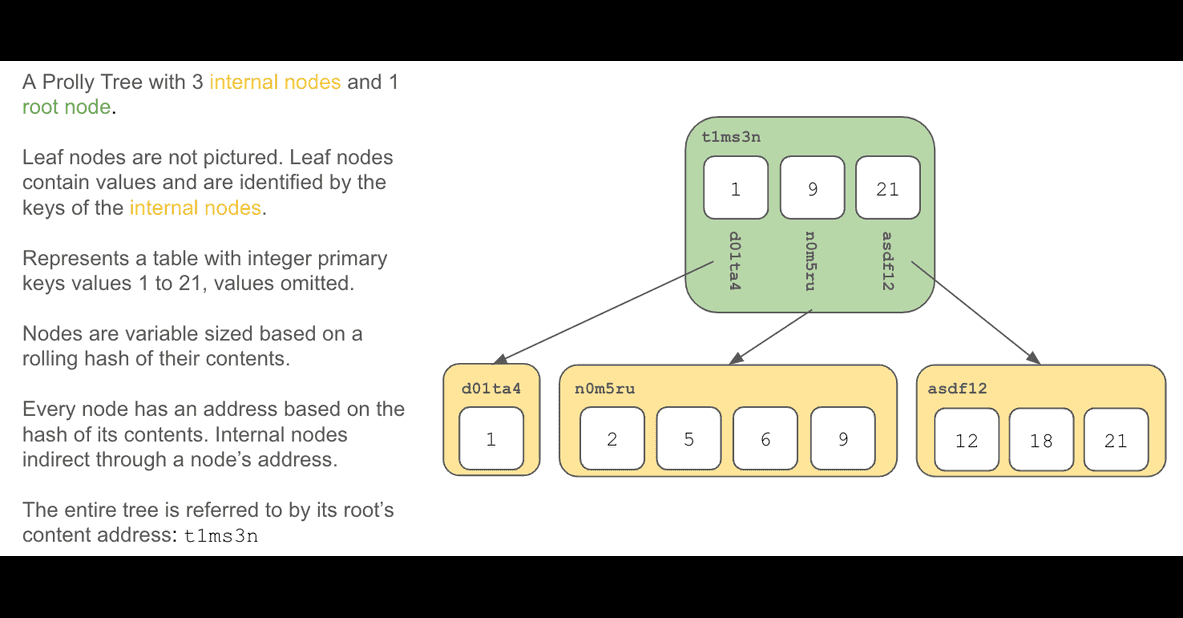

So while I’m still struggling to fully understand what this is, conceptually it looks like a blockchain on top of Syncthing where, even if you subscribe to a read-only share, you can locally delete what you don’t want to keep. So technically you could make BitTorrent behave like Syncthing, with a search function for contacts you already know.

22·11 months ago

Big O notation is useless for smaller sets of data. Sometimes it’s worse than useless, it’s misleading.

I don’t agree that it’s useless or misleading. The smaller the dataset, the less it matters, but how the rest of the system uses the algorithm, and how the context around it changes, makes a massive difference.

Let’s say that you need to sort 64 ints in the code that boots your operating system. You need to sort them once per boot, and you boot less often than once per day; in fact, you know of instances of the OS that have 14 years of uptime, so it doesn’t matter at all, right? Welp. Now your OS is used by a big cloud provider, and they use that code to boot the kernel 13 billion times per day. The context changed, time passed, and your silly bubble sort that doesn’t matter on small numbers is still there.
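
To put rough numbers on that, here is a small sketch that counts comparisons for a plain bubble sort (no early exit) and a top-down merge sort on 64 ints, then scales by the 13 billion boots per day from the example. The function names, the random test data and the choice of merge sort as the alternative are assumptions made for illustration; comparison counts are only a proxy for real cost.

```python
import random


def bubble_sort_count(values):
    """Bubble sort without early exit; returns (sorted list, comparisons)."""
    data = list(values)
    comparisons = 0
    n = len(data)
    for i in range(n - 1):
        for j in range(n - 1 - i):
            comparisons += 1
            if data[j] > data[j + 1]:
                data[j], data[j + 1] = data[j + 1], data[j]
    return data, comparisons


def merge_sort_count(values):
    """Top-down merge sort; returns (sorted list, comparisons)."""
    data = list(values)
    if len(data) <= 1:
        return data, 0
    mid = len(data) // 2
    left, left_count = merge_sort_count(data[:mid])
    right, right_count = merge_sort_count(data[mid:])
    merged = []
    comparisons = left_count + right_count
    i = j = 0
    while i < len(left) and j < len(right):
        comparisons += 1
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged, comparisons


BOOTS_PER_DAY = 13_000_000_000  # figure taken from the example above

rng = random.Random(0)  # fixed seed so the run is repeatable
sample = [rng.randrange(1_000_000) for _ in range(64)]

_, bubble = bubble_sort_count(sample)  # always n*(n-1)/2 = 2016 for n = 64
_, merge = merge_sort_count(sample)    # depends on the input order

print(f"bubble sort: {bubble} comparisons per boot, {bubble * BOOTS_PER_DAY:,} per day")
print(f"merge sort:  {merge} comparisons per boot, {merge * BOOTS_PER_DAY:,} per day")
```

Per boot the gap is a few thousand comparisons, which nobody would notice. Multiplied by the boot count, it becomes a difference of trillions of comparisons per day.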

46·11 months ago

Here’s the blog post about the change, dated June this year

Half a year too late for that outrage anyway :)

12538·11 months ago

Fantastic way to start a shitstorm. You people don’t even use the search function while logged out, because if you did, you would know they changed it in 2016. Microsoft has nothing to do with it.

66·11 months ago

Yeah, fuck Microsoft. They haven’t changed at all.

GitHub changed that a few months before acquisition talks even started lol

21·1 year ago

There is already a uBlock Origin build that is MV3-only, uBlock Origin Lite: https://addons.mozilla.org/en-US/firefox/addon/ublock-origin-lite/ Give it a try and see if you notice any difference.

You’re responding with talk of compiling JS to a story of mine that is so old… I had to check, and yes, JS existed back then. HTTP/2? It wasn’t even planned. This was back when IRC communities weren’t sure whether the P in LAMP stood for Perl or PHP, because both were equally popular ;)

and actively do damage to companies that don’t.